The LLM Stack: A New Paradigm in Tech Architecture

We recommend reading the companion blog before this article.

As seasoned developers, we’ve witnessed the ebb and flow of numerous tech stacks. From the meteoric rise of MERN to the gradual decline of AngularJS, and the innovative emergence of Jamstack, tech stacks have been the backbone of web development evolution.

Enter the LLM Stack: a tech stack designed to change how developers build and scale Large Language Model (LLM) applications.

Why a New Stack for LLM Applications?

LLM applications are deceptively simple to kickstart, but scaling them opens a Pandora’s box of challenges:

- Platform Limitations: Traditional stacks struggle with the unique demands of LLM apps.

- Tooling Gaps: Existing tools often fall short in managing LLM-specific workflows.

- Observability Hurdles: Monitoring LLM performance requires specialized solutions.

- Security Concerns: LLMs introduce new vectors for data breaches and prompt injections.

To illustrate this evolution, we’ve created a companion blog that walks through a real-world LLM application’s journey from MVP to scalable product.

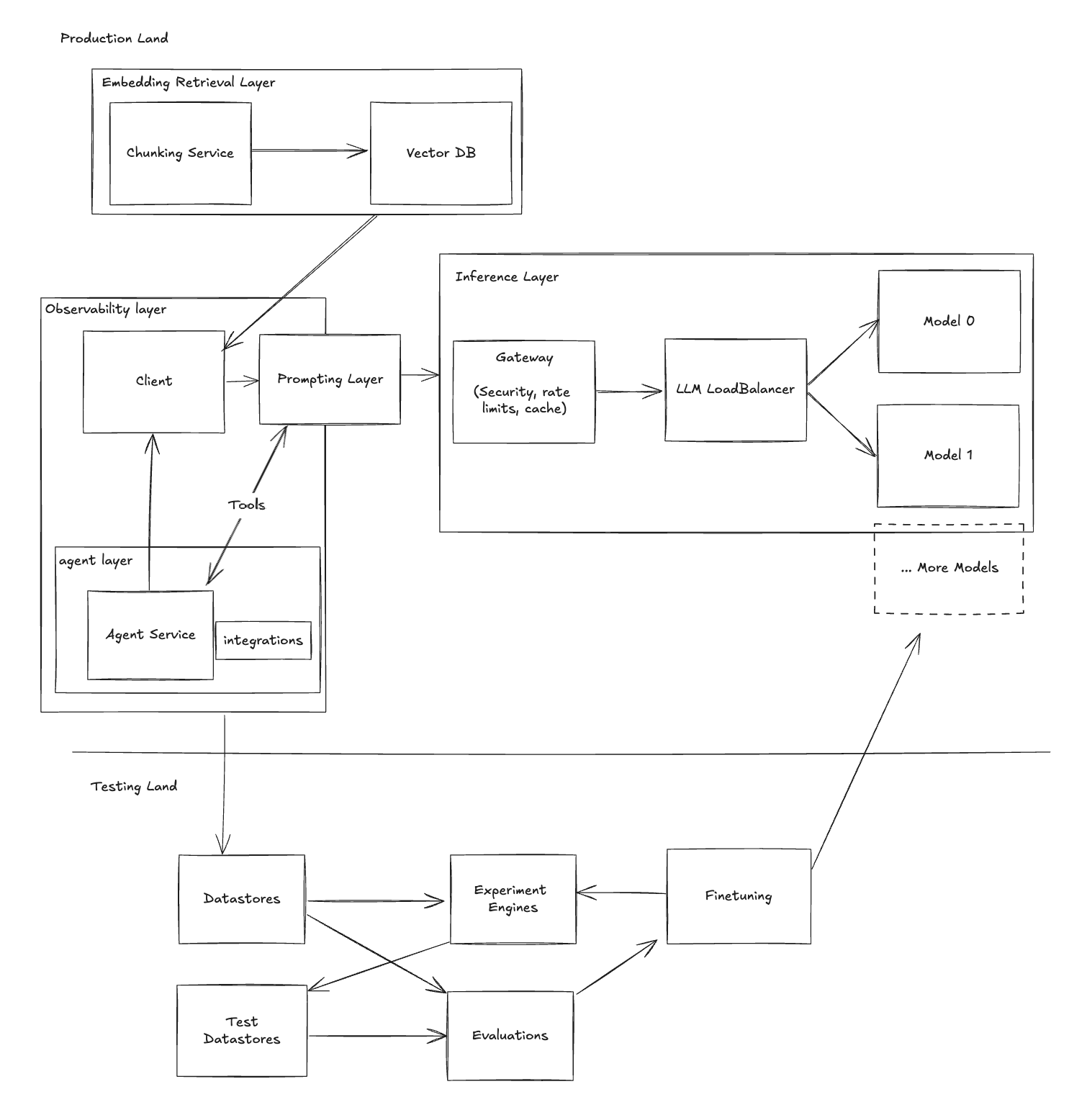

Anatomy of the LLM Stack

Let’s dissect the architecture of a robust LLM stack, based on our detailed example:

Note: This article does not tell you which stack to choose; it outlines the components for building an LLM stack.

Key Components

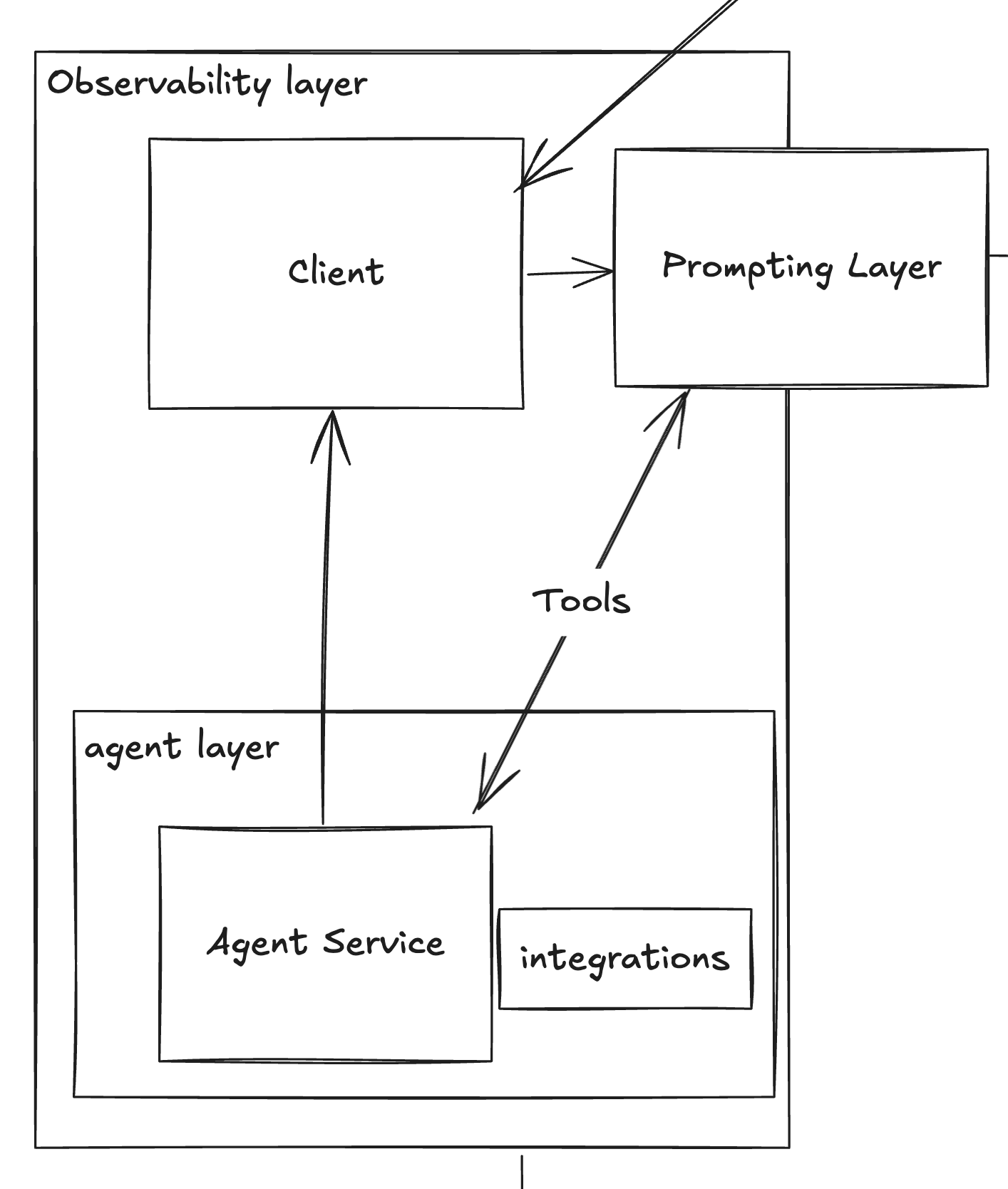

1. Observability Layer

| Service | Cutting-Edge Solutions |
|---|---|
| Observability | Helicone, LangSmith, Langfuse, Traceloop, PromptLayer, HoneyHive, AgentOps |
| Clients | LangChain, LiteLLM, LlamaIndex |
| Agents | CrewAI, AutoGPT |
| Prompting Layer | Helicone Prompting, PromptLayer, Promptfoo |
| Integrations | Zapier, LlamaIndex |
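
To make the role of the observability layer concrete, here is a minimal sketch of what such a tool records around each model call: the prompt, the response, the latency, and whether the call succeeded. It is not the API of any product in the table; `Tracer`, `LLMCallRecord`, and `fake_model` are hypothetical names used only for illustration, and records are kept in memory rather than sent to a backend.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class LLMCallRecord:
    prompt: str
    response: str
    latency_s: float
    ok: bool


@dataclass
class Tracer:
    """Hypothetical in-memory tracer: one record per model call."""
    records: List[LLMCallRecord] = field(default_factory=list)

    def wrap(self, model: Callable[[str], str]) -> Callable[[str], str]:
        def traced(prompt: str) -> str:
            start = time.perf_counter()
            try:
                response = model(prompt)
            except Exception:
                # Record the failed call, then let the error propagate.
                elapsed = time.perf_counter() - start
                self.records.append(LLMCallRecord(prompt, "", elapsed, False))
                raise
            elapsed = time.perf_counter() - start
            self.records.append(LLMCallRecord(prompt, response, elapsed, True))
            return response

        return traced


def fake_model(prompt: str) -> str:
    # Stand-in for a real provider call (assumption for this sketch).
    return prompt.upper()


tracer = Tracer()
traced_model = tracer.wrap(fake_model)
traced_model("hello")
```

In a real deployment the records would typically be shipped to an observability service instead of a list, but the shape of the data is similar.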

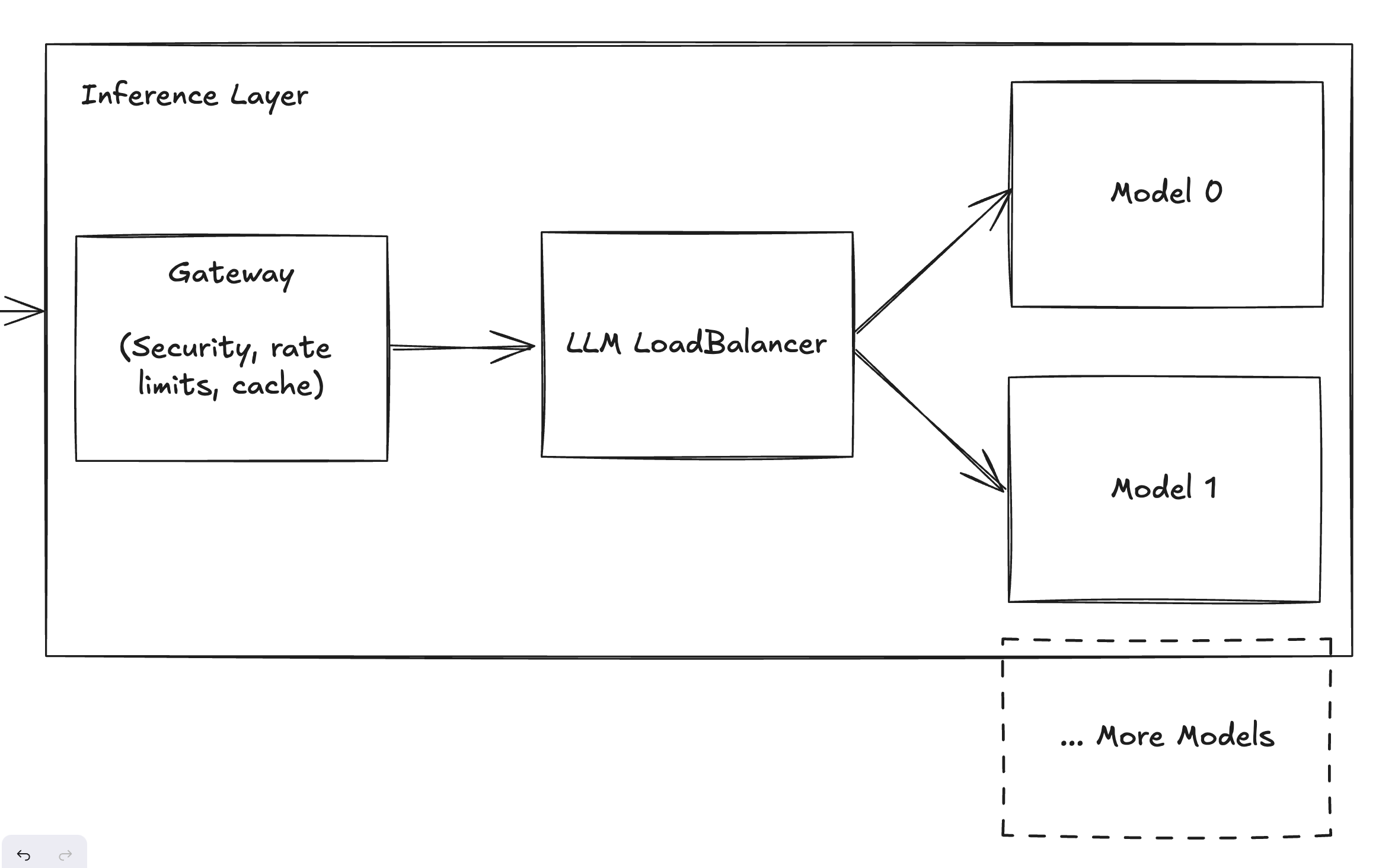

2. Inference Layer

| Service | Innovative Products |
|---|---|
| Gateway | Helicone Gateway, Cloudflare AI, PortKey, KeywordsAI |
| LLM Load Balancer | Martian, LiteLLM |
| Model Providers | OpenAI, Anthropic, TogetherAI, Anyscale, Groq |
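
One job commonly handled in the inference layer is routing a request to another provider when the first one fails. The sketch below shows simple ordered fallback under stated assumptions: providers are plain Python callables, and `complete_with_fallback`, `flaky_primary`, and `stable_backup` are hypothetical names. It does not reproduce how any gateway or load balancer in the table works internally.

```python
from typing import Callable, Dict, List, Tuple

Provider = Callable[[str], str]


class AllProvidersFailed(RuntimeError):
    """Raised when every configured provider raised an error."""


def complete_with_fallback(
    prompt: str, providers: List[Tuple[str, Provider]]
) -> Tuple[str, str]:
    """Try providers in order; return (provider_name, completion)
    from the first one that succeeds."""
    errors: Dict[str, str] = {}
    for name, call in providers:
        try:
            return name, call(prompt)
        except Exception as exc:
            # Remember why this provider failed and move on to the next.
            errors[name] = repr(exc)
    raise AllProvidersFailed(f"no provider succeeded: {errors}")


def flaky_primary(prompt: str) -> str:
    # Simulates a provider outage (assumption for this sketch).
    raise TimeoutError("simulated outage")


def stable_backup(prompt: str) -> str:
    return f"echo: {prompt}"


used, text = complete_with_fallback(
    "ping", [("primary", flaky_primary), ("backup", stable_backup)]
)
```

Here `used` ends up as `"backup"` because the primary raises. A production router would usually add timeouts, retries, and weighting by cost or latency on top of this.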

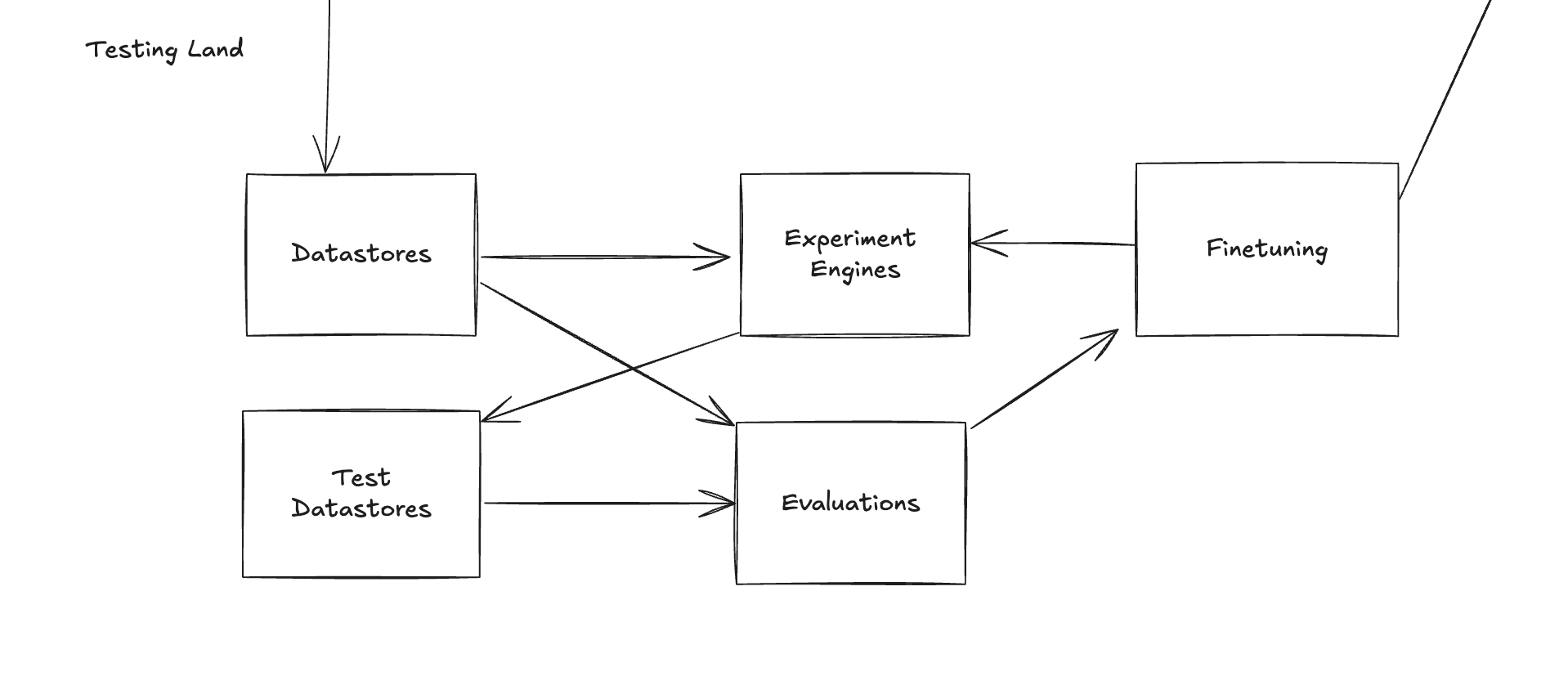

3. Testing & Experimentation Layer

| Service | Next-Gen Tools |
|---|---|
| Datastores | Helicone API, Langfuse, LangSmith |
| Experimentation | Helicone Experiments, Promptfoo, Braintrust |
| Evaluators | Promptfoo, Lastmile, BaseRun |
| Fine Tuning | OpenPipe, Autonomi |
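
As a rough illustration of how experimentation and evaluators fit together, the sketch below runs two prompt templates over a tiny dataset and scores each with an exact-match evaluator. Everything here is an assumption made for the example: `run_experiment`, `exact_match`, and `toy_model` are hypothetical, and real tools support richer evaluators and stored datasets.

```python
from typing import Callable, Dict, List, Tuple

Example = Tuple[str, str]  # (input text, expected output)


def exact_match(expected: str, actual: str) -> float:
    # Case-insensitive exact match, scored as 1.0 or 0.0.
    return 1.0 if expected.strip().lower() == actual.strip().lower() else 0.0


def run_experiment(
    templates: Dict[str, str],
    dataset: List[Example],
    model: Callable[[str], str],
    evaluator: Callable[[str, str], float] = exact_match,
) -> Dict[str, float]:
    """Return the mean evaluator score for each prompt template."""
    if not dataset:
        raise ValueError("dataset must not be empty")
    scores: Dict[str, float] = {}
    for name, template in templates.items():
        results = [
            evaluator(expected, model(template.format(input=text)))
            for text, expected in dataset
        ]
        scores[name] = sum(results) / len(results)
    return scores


def toy_model(prompt: str) -> str:
    # Stand-in model: answers only when the prompt asks for a capital.
    capitals = {"France": "Paris", "Japan": "Tokyo"}
    if "capital" not in prompt:
        return "unknown"
    for country, capital in capitals.items():
        if country in prompt:
            return capital
    return "unknown"


dataset = [("France", "Paris"), ("Japan", "Tokyo")]
templates = {
    "vague": "Tell me about {input}.",
    "specific": "What is the capital of {input}?",
}
scores = run_experiment(templates, dataset, toy_model)
# scores == {"vague": 0.0, "specific": 1.0}
```

The same loop structure applies when the model is a real provider call and the evaluator is something more forgiving than exact match.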

Helicone: Your Gateway to LLM Excellence

Helicone isn’t just another tool in the LLM stack - it’s a game-changer. Our primary focus areas, Gateway and Observability, are crucial for building robust, scalable LLM applications.


By integrating Helicone into your LLM workflow, you’re not just adopting a tool; you’re embracing a philosophy of efficiency, scalability, and deep insights into your LLM applications.

Ready to supercharge your LLM development? Dive deeper into Helicone’s capabilities and see how it can transform your LLM stack today!